AI is transforming how data centers operate by automating tasks that were once manual and error-prone. Key benefits include reduced costs, better energy efficiency, and fewer outages.

With global electricity use by data centers expected to double by 2027, AI offers practical solutions to avoid waste, improve reliability, and lower operational costs. Companies like Google and Microsoft have already achieved significant savings through AI-driven initiatives.

AI is reshaping how data centers operate by replacing outdated, manual processes with intelligent systems that predict issues, optimize resources, and reduce energy waste. This transformation is particularly evident in three key areas: maintenance, resource allocation, and energy management. Together, these advancements tackle long-standing challenges that traditional methods struggle to address effectively. Let’s dive into how AI is making a difference.

The old model of fixed-schedule maintenance often leads to unnecessary part replacements or, worse, overlooked failures. AI changes the game by analyzing real-time sensor data to predict each component's Remaining Useful Life (RUL). This allows teams to perform repairs exactly when needed - before a failure happens - rather than relying on arbitrary schedules.

This shift to proactive maintenance delivers impressive results. AI-powered systems can reduce downtime by 35–45%, prevent 70–75% of unexpected breakdowns, lower maintenance costs by 25–30%, and extend asset lifespans by about 40% [8]. Planned maintenance is also far more efficient, requiring 3.2 times less labor compared to emergency repairs [8].

Real-world examples highlight the impact. In 2026, Shell’s AI platform identified two critical equipment failures in advance, saving about $2 million and avoiding major disruptions [8]. Similarly, a Fortune 500 manufacturer cut unplanned downtime by 45%, saving $2.8 million annually with AI-driven predictive maintenance [8]. AI tools like computer vision systems are also stepping in to detect physical issues - like frayed cables or deteriorating server components - that human inspectors might miss [4].

By shifting from reactive to predictive operations, data centers are achieving higher reliability while keeping costs under control.

Static resource allocation is a money sink. Because capacity is provisioned for worst-case peaks, servers often sit idle during off-peak hours. In fact, 32% of cloud spending is wasted due to inefficiencies [4]. AI addresses this problem with composable infrastructure that dynamically assigns compute, GPU, storage, and networking resources based on real-time demand.

Machine learning models can forecast workload patterns and redistribute tasks to underused zones or periods of lower energy prices. A great example is Microsoft Azure, which in 2025 used Gradient Boosted Trees and Random Forest models to predict server loads across its global network. By reallocating workloads to underutilized areas and off-peak hours, the platform cut energy use by 10% [6]. Similarly, Alibaba Cloud leveraged neural network-based AI to balance energy between the grid, battery storage, and renewable sources, achieving 8% energy savings and a 5% cut in carbon emissions [6].
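The forecast-then-redistribute loop can be sketched in a few lines. To keep it self-contained, this example swaps in simple exponential smoothing instead of the Gradient Boosted Trees and Random Forest models Azure used; the zone names, capacities, and `alpha` value are hypothetical.

```python
def exp_smooth_forecast(history, alpha=0.5):
    """One-step-ahead load forecast using simple exponential smoothing."""
    level = history[0]
    for value in history[1:]:
        # Blend the newest observation with the running level.
        level = alpha * value + (1 - alpha) * level
    return level


def pick_zone(zone_history, capacity, job_load, alpha=0.5):
    """Return the zone with the most forecast headroom that still fits the job, else None."""
    best_zone, best_headroom = None, float("-inf")
    for zone, history in zone_history.items():
        headroom = capacity[zone] - exp_smooth_forecast(history, alpha)
        if headroom >= job_load and headroom > best_headroom:
            best_zone, best_headroom = zone, headroom
    return best_zone


# Hypothetical utilization history (% of capacity) for two zones.
history = {"zone-a": [70, 80, 90], "zone-b": [40, 35, 30]}
capacity = {"zone-a": 100, "zone-b": 100}
print(pick_zone(history, capacity, job_load=20))  # zone-b
```

Zone A is trending toward 82.5% utilization, leaving too little headroom, so the job lands in the underused zone B. A production scheduler would also weigh energy prices and data locality.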

"These modern tools are a godsend for IT operations teams... we've seen intelligent AI automation cut planned spending on hardware and additional resources in half." - Kyle Brown, IBM Fellow [4]

This dynamic approach not only saves money but also sets the stage for smarter energy usage, as explored in the next section.

Cooling systems are one of the biggest energy drains in data centers, often accounting for nearly 40% of total energy consumption. AI tackles this challenge with reinforcement learning agents that continuously adjust cooling settings - like water flow and temperature - based on real-time server loads and heat patterns. Google’s DeepMind system, for instance, delivered a 40% reduction in cooling energy and improved Power Usage Effectiveness (PUE) by 15% using real-time telemetry data [6].
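PUE is total facility energy divided by the energy delivered to IT equipment, so 1.0 is the theoretical ideal. The small calculation below uses hypothetical numbers (not Google's) to show why a large cut in cooling energy moves PUE by a smaller percentage.

```python
def pue(it_kwh, cooling_kwh, other_overhead_kwh):
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    total_kwh = it_kwh + cooling_kwh + other_overhead_kwh
    return total_kwh / it_kwh


# Hypothetical facility where cooling is 40% of total energy (700 of 1,750 kWh).
baseline = pue(it_kwh=1000, cooling_kwh=700, other_overhead_kwh=50)
# Same facility after a 40% cut in cooling energy.
improved = pue(it_kwh=1000, cooling_kwh=700 * 0.6, other_overhead_kwh=50)
print(round(baseline, 2), round(improved, 2))  # 1.75 1.47
```

In this example a 40% cooling reduction lowers PUE by 16% (from 1.75 to 1.47), because IT load and other overhead are unchanged.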

AI also enables smarter workload scheduling based on grid conditions. Between December 2024 and March 2025, the Scripps Institution of Oceanography piloted Dell’s "Concept Astro" AI system. By scheduling image-processing tasks (350 GB per dive) during optimal energy windows, they achieved a 20% cost reduction and cut carbon emissions by 32% [9][7]. This system uses live grid data to prioritize workloads during periods of high renewable energy availability and lower costs.
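A minimal version of grid-aware scheduling picks the start hour whose window has the lowest forecast carbon intensity. This is a sketch under stated assumptions, not Dell's Concept Astro implementation: the hourly forecast values and the function name `best_start_hour` are hypothetical, and the same sliding-window logic works with hourly prices instead of carbon.

```python
def best_start_hour(carbon_forecast, duration_hours):
    """Return the start index whose window has the lowest average carbon intensity.

    carbon_forecast: hourly grid carbon intensity (e.g., gCO2/kWh), one value per hour.
    duration_hours: how many consecutive hours the batch job needs.
    """
    if duration_hours > len(carbon_forecast):
        raise ValueError("job does not fit in the forecast horizon")
    window = sum(carbon_forecast[:duration_hours])
    best_start, best_sum = 0, window
    for start in range(1, len(carbon_forecast) - duration_hours + 1):
        # Slide the window one hour: add the new hour, drop the oldest one.
        window += carbon_forecast[start + duration_hours - 1] - carbon_forecast[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start


# Hypothetical forecast: intensity dips mid-horizon as renewable supply peaks.
forecast = [400, 380, 300, 200, 180, 250, 390]
print(best_start_hour(forecast, duration_hours=2))  # 3
```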

Next-gen servers can already lower CPU power consumption by up to 65% compared to older models [9][7]. But AI takes this further by predicting power usage patterns and ensuring resources are only deployed when truly needed. This is critical as some experts predict data center energy consumption could double by 2030 [9].

When it comes to leveraging AI for workflow automation, a step-by-step approach is key. By rolling out changes gradually rather than overhauling entire systems, organizations can integrate AI while minimizing disruptions. It’s no surprise that 80% of executives plan to automate their IT networking operations within the next three years [4]. This phased method builds on AI's established advantages in areas like predictive maintenance, resource management, and energy efficiency.

The first step is to evaluate your existing processes and identify where AI can make the biggest impact. Start by defining your operational goals - whether it’s lowering energy expenses, avoiding hardware failures, or streamlining resource allocation [2].

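Take stock of your data sources, such as DCIM (data center infrastructure management), BMS (building management system), and ITSM (IT service management) platforms, along with IoT sensors [2][10]. The quality of your AI-driven results hinges on the accuracy and completeness of the data you provide.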

Pinpoint operational bottlenecks that could benefit from automation. Tasks like real-time power management, cooling system adjustments, and hardware lifecycle monitoring are excellent candidates [2]. Additionally, consider whether fragmented systems across environmental, electrical, mechanical, and IT domains are limiting your ability to see the bigger picture. Tools like Universal Intelligent Infrastructure Management (UIIM) can help bridge these gaps [10].

Focus on high-impact use cases. For example, AI-driven Condition-Based Maintenance (CBM) can cut on-site maintenance visits by up to 40% and reduce total ownership costs by 20% [5]. Since human error is responsible for 66% to 80% of data center outages [5], automating tasks like predictive maintenance for UPS batteries and cooling systems can deliver immediate results.
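Condition-based maintenance replaces the calendar with measured condition. The sketch below flags UPS battery strings for service only when internal resistance has risen past a set fraction of its commissioning baseline, or when temperature exceeds a limit. Both thresholds, the `BatteryReading` fields, and the string IDs are hypothetical placeholders; real limits should come from the battery vendor's guidance.

```python
from dataclasses import dataclass


@dataclass
class BatteryReading:
    string_id: str
    baseline_mohm: float  # internal resistance when commissioned
    current_mohm: float   # latest measured internal resistance
    temp_c: float


def needs_service(r, resistance_rise=0.25, max_temp_c=30.0):
    """Condition-based rule: dispatch a technician only when a limit is crossed.

    Hypothetical defaults: a 25% resistance rise or a temperature above 30 degrees C.
    """
    rise = (r.current_mohm - r.baseline_mohm) / r.baseline_mohm
    return rise >= resistance_rise or r.temp_c > max_temp_c


def service_queue(readings, **limits):
    """IDs of battery strings that need an on-site visit."""
    return [r.string_id for r in readings if needs_service(r, **limits)]


readings = [
    BatteryReading("A", 4.0, 4.4, 24.0),  # 10% rise, normal temperature
    BatteryReading("B", 4.0, 5.2, 25.0),  # 30% rise
    BatteryReading("C", 4.0, 4.1, 33.0),  # running hot
]
print(service_queue(readings))  # ['B', 'C']
```

String A is skipped entirely, which is where the reduction in on-site visits comes from.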

| Functional Area | AI Automation Opportunity | Key Benefit |
|---|---|---|
| Power Management | Real-time utility selection based on cost or carbon | Lower power costs and reduced emissions [2] |
| Cooling Control | Predictive thermal analysis using weather and schedules | Energy efficiency and dynamic cooling [2] |
| Hardware Maintenance | Predictive monitoring of UPS and server lifecycles | Prevent unplanned downtime [2][5] |
| Provisioning | Automated VM and network setup | Fewer manual errors and faster deployment [2][10] |

Once you’ve identified these opportunities, the next step is integrating AI tools into your existing systems.

To ensure a smooth transition, integrate AI tools that enhance your current systems without disrupting operations. Modern AIOps platforms are designed to work alongside existing DCIM tools, improving network performance and hardware maintenance [2][4].

Start small. For instance, deploying automated cooling adjustments can yield quick returns on investment before expanding to more comprehensive predictive maintenance solutions [2].

Address security concerns by training AI models with synthetic data. This method replicates real-world scenarios while safeguarding sensitive information [2].
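One simple way to produce synthetic telemetry is to learn each metric's mean and standard deviation from real data, then sample new rows from a normal distribution, so no real record is copied. This is a deliberately basic sketch: the metric names are hypothetical, and because each metric is sampled independently, correlations (for example, between power draw and inlet temperature) are lost. Production tools use richer generative models.

```python
import random
from statistics import mean, stdev


def synthesize(real_rows, n, seed=None):
    """Generate n synthetic rows matching each metric's mean and standard deviation.

    real_rows: list of dicts such as {"inlet_temp_c": 24.1, "power_kw": 6.3}.
    Each metric is sampled independently from a normal distribution, so
    correlations between metrics are NOT preserved.
    """
    rng = random.Random(seed)
    metrics = real_rows[0].keys()
    params = {
        m: (mean(r[m] for r in real_rows), stdev(r[m] for r in real_rows))
        for m in metrics
    }
    return [{m: rng.gauss(mu, sigma) for m, (mu, sigma) in params.items()} for _ in range(n)]


# Hypothetical real sensor rows.
real = [
    {"inlet_temp_c": 22.0, "power_kw": 5.0},
    {"inlet_temp_c": 24.0, "power_kw": 6.0},
    {"inlet_temp_c": 26.0, "power_kw": 7.0},
    {"inlet_temp_c": 24.0, "power_kw": 6.0},
]
print(synthesize(real, 3, seed=42))
```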

"Today, you can implement a common AI DevOps platform that is completely configuration-driven and automated" [4].

For AI and machine learning workloads, configure your network for lossless transport using RoCEv2 (RDMA over Converged Ethernet). This setup requires both endpoints and switches to support Quality of Service (QoS) configurations [12][11]. Tools like Cisco Nexus Dashboard Fabric Controller (NDFC) or Juniper Apstra can automate network fabric management and prevent configuration drift [11][13].

Incorporate telemetry tools to monitor network activity, identify "hot spots", and fine-tune operations in real-time. Enabling Data Center Quantized Congestion Notification (DCQCN) - a mix of Explicit Congestion Notification (ECN) and Priority Flow Control (PFC) - can prevent packet loss during congestion [11][13].
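On the switch side, DCQCN's ECN marking follows a RED-style curve: below a minimum queue depth nothing is marked, above a maximum everything is marked, and in between the marking probability rises linearly up to a configured ceiling. The sketch below models only that curve. The parameter names `k_min_kb`, `k_max_kb`, and `p_max` and their values are illustrative; vendors expose these settings under their own names and units. PFC, the other half of the scheme, pauses upstream senders at a separate, higher buffer threshold and is not modeled here.

```python
import random


def ecn_mark_probability(queue_kb, k_min_kb, k_max_kb, p_max):
    """RED-style ECN marking probability as a function of egress queue depth."""
    if queue_kb <= k_min_kb:
        return 0.0  # queue is short: no congestion signal
    if queue_kb > k_max_kb:
        return 1.0  # queue is long: mark every packet
    # Linear ramp from 0 at k_min up to p_max at k_max.
    return p_max * (queue_kb - k_min_kb) / (k_max_kb - k_min_kb)


def should_mark(queue_kb, k_min_kb, k_max_kb, p_max, rng=random):
    """Randomly decide whether to set the ECN bit on one packet."""
    return rng.random() < ecn_mark_probability(queue_kb, k_min_kb, k_max_kb, p_max)


# Hypothetical thresholds: start marking at 100 KB, mark everything above 400 KB.
print(ecn_mark_probability(250, k_min_kb=100, k_max_kb=400, p_max=0.2))  # about 0.1
```

Senders that receive the resulting congestion notifications slow down before buffers fill, which keeps PFC pauses rare.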

Once you’ve successfully integrated AI locally, you can scale these workflows across multiple facilities.

After proving AI’s effectiveness in one location, expand its reach through standardization and centralized management. Implement non-blocking fabric architectures, like two-tier or three-tier "any-to-any" Clos fabrics, to ensure consistent performance and bandwidth across large AI clusters [11][13].
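Whether a leaf-spine fabric is non-blocking comes down to simple arithmetic: each leaf needs at least as much spine-facing bandwidth as server-facing bandwidth. The helpers below are a sketch with hypothetical port counts. The second one applies the standard result that a non-blocking two-tier fabric built from switches with `radix` ports supports `radix * radix / 2` hosts.

```python
def leaf_oversubscription(server_ports, server_gbps, uplink_ports, uplink_gbps):
    """Ratio of server-facing to spine-facing bandwidth on one leaf.

    1.0 (1:1) means the leaf is non-blocking; above 1.0 means oversubscribed.
    """
    downlink = server_ports * server_gbps
    uplink = uplink_ports * uplink_gbps
    return downlink / uplink


def max_hosts_two_tier(radix):
    """Hosts in a non-blocking two-tier leaf-spine built from radix-port switches.

    Each leaf splits its ports evenly between hosts and spines, so there are
    radix // 2 spines, and each spine can connect up to radix leaves.
    """
    return radix * (radix // 2)


print(leaf_oversubscription(32, 400, 16, 800))  # 1.0 (non-blocking)
print(leaf_oversubscription(48, 100, 8, 400))   # 1.5 (oversubscribed)
print(max_hosts_two_tier(64))                   # 2048
```

Larger GPU clusters that outgrow `max_hosts_two_tier` are what push designs to a third tier.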

Standardize lossless Ethernet using RoCEv2 across all sites. This enables high-speed, low-latency communication between GPUs without overloading CPUs [11]. With networking speeds advancing from 400 Gbps to 800 Gbps and even 1.6 Tbps [13], this foundation ensures scalability for future demands.

Utilize Intent-Based Networking (IBN) to automate tasks across the network lifecycle, from design (Day 0) to ongoing operations (Day 2+). This approach helps maintain configuration consistency across global facilities [11][13]. Centralize management with a platform that handles everything - from initial provisioning to daily operations across edge and core environments [3].
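At its core, intent-based consistency checking compares the intended state of each device with what is actually running and reports the differences. Here is a minimal sketch of that comparison. The device names and setting keys (`mtu`, `pfc_priority`) are hypothetical, and real IBN tools such as the ones named above gather observed state from the devices themselves.

```python
def config_drift(intended, observed):
    """Compare intended vs. observed settings per device.

    Both arguments map device name -> {setting: value}.
    Returns {device: {setting: (intended_value, observed_value)}} for mismatches only.
    A setting missing on the device is reported with an observed value of None.
    Extra settings present only on the device are ignored in this sketch.
    """
    drift = {}
    for device, wanted in intended.items():
        actual = observed.get(device, {})
        diffs = {k: (v, actual.get(k)) for k, v in wanted.items() if actual.get(k) != v}
        if diffs:
            drift[device] = diffs
    return drift


intended = {"leaf1": {"mtu": 9216, "pfc_priority": 3}, "leaf2": {"mtu": 9216, "pfc_priority": 3}}
observed = {"leaf1": {"mtu": 9216, "pfc_priority": 3}, "leaf2": {"mtu": 1500, "pfc_priority": 3}}
print(config_drift(intended, observed))  # {'leaf2': {'mtu': (9216, 1500)}}
```

Running the same check against every site is what keeps the fabric consistent across facilities; remediation (pushing the intended value back) would be a separate step.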

Train your team to stay ahead of AI advancements: 76% of executives expect to apply AI skills to IT operations within three years [4]. Your staff will need to understand AI algorithms, hardware configurations, and networking protocols like RoCEv2 [2]. To avoid issues like "PFC storms", configure a PFC watchdog to detect and manage problematic queues [11].
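The logic behind a PFC watchdog is a timer: if a queue stays paused while draining nothing for longer than a detection interval, it is treated as stuck. The sketch below applies that rule to hypothetical telemetry samples. Real watchdogs run on the switch, and their intervals and recovery actions are vendor-specific configuration, so the sample format and `detect_ms` value here are assumptions.

```python
def stuck_queues(samples, detect_ms):
    """Flag queues that stayed paused with no packets transmitted for detect_ms or longer.

    samples: list of (timestamp_ms, queue_id, paused, tx_packets_delta) in time order.
    """
    paused_since = {}
    flagged = set()
    for ts, queue, paused, tx_delta in samples:
        if paused and tx_delta == 0:
            # Remember when this continuous stall began.
            start = paused_since.setdefault(queue, ts)
            if ts - start >= detect_ms:
                flagged.add(queue)
        else:
            paused_since.pop(queue, None)  # queue is draining again; reset the timer
    return flagged


samples = [
    (0, 3, True, 0), (0, 5, True, 0),
    (100, 3, True, 0), (100, 5, False, 120),  # queue 5 resumes sending
    (200, 3, True, 0), (200, 5, True, 0),
]
print(stuck_queues(samples, detect_ms=200))  # {3}
```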

"Generative AI is core to how many modern enterprises build new digital products to make money. But what if the same technology could radically change the business processes needed to design, deploy, manage and observe those applications?"

– Richard Warrick, Global Research Lead, IBM Institute for Business Value [4]

To support cutting-edge AI workflows, a reliable and flexible cloud infrastructure is key. SurferCloud's platform is built to handle the complexities of AI-driven workflow automation, even in distributed environments.

SurferCloud's elastic compute servers are designed to handle fluctuating demands in AI workloads. Using advanced algorithms, these servers dynamically adjust computing power and storage - scaling up during high-demand periods and scaling down when activity slows [14]. This ensures resources are allocated efficiently, whether it's for intensive tasks like thermal analysis or hardware diagnostics. Additionally, AI-powered resource management detects underused or idle servers and powers them down, cutting down on energy consumption and operational costs [15].
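The source does not describe SurferCloud's scaling algorithm, so the sketch below uses a common target-tracking rule (the same shape as the Kubernetes Horizontal Pod Autoscaler formula): resize the pool in proportion to how far average utilization sits from a target, then flag nearly idle servers as power-down candidates. All thresholds and server names are hypothetical.

```python
import math


def desired_instances(current, avg_util_pct, target_util_pct, min_n=1, max_n=100):
    """Target tracking: size the pool so average utilization moves toward the target."""
    wanted = math.ceil(current * avg_util_pct / target_util_pct)
    return max(min_n, min(max_n, wanted))  # clamp to the allowed pool size


def idle_servers(util_pct_by_server, idle_below_pct=5):
    """Servers quiet enough to be candidates for powering down."""
    return sorted(s for s, u in util_pct_by_server.items() if u < idle_below_pct)


print(desired_instances(4, avg_util_pct=90, target_util_pct=60))   # 6 (scale up)
print(desired_instances(10, avg_util_pct=20, target_util_pct=50))  # 4 (scale down)
print(idle_servers({"web-1": 2, "web-2": 45, "batch-1": 0}))       # ['batch-1', 'web-1']
```

Rounding up with `math.ceil` errs toward extra capacity, and the `min_n` floor keeps at least one instance running during quiet periods.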

With over 17 data centers worldwide, SurferCloud enables low-latency AI model deployment [18]. This global reach is essential as AI workloads increasingly move from centralized training hubs to distributed edge inference servers [18].

"AI must be closer to the data. This requires infrastructure that spans from core to edge - plus visibility and resilience across a complex environment." - Cisco [16]

SurferCloud's globally connected network of data centers allows seamless movement of workloads across regions. For example, predictive maintenance models can be deployed across facilities in North America, Europe, and Asia without requiring infrastructure reconfiguration [16]. A real-world example of this approach is Workday, which in 2023 developed five interconnected data centers using automated cloud fabric networks. This setup reduced deployment times by 83% while enabling smooth workload portability across global regions [16][17]. This type of centralized management simplifies operations for AI workloads across multiple locations [17].

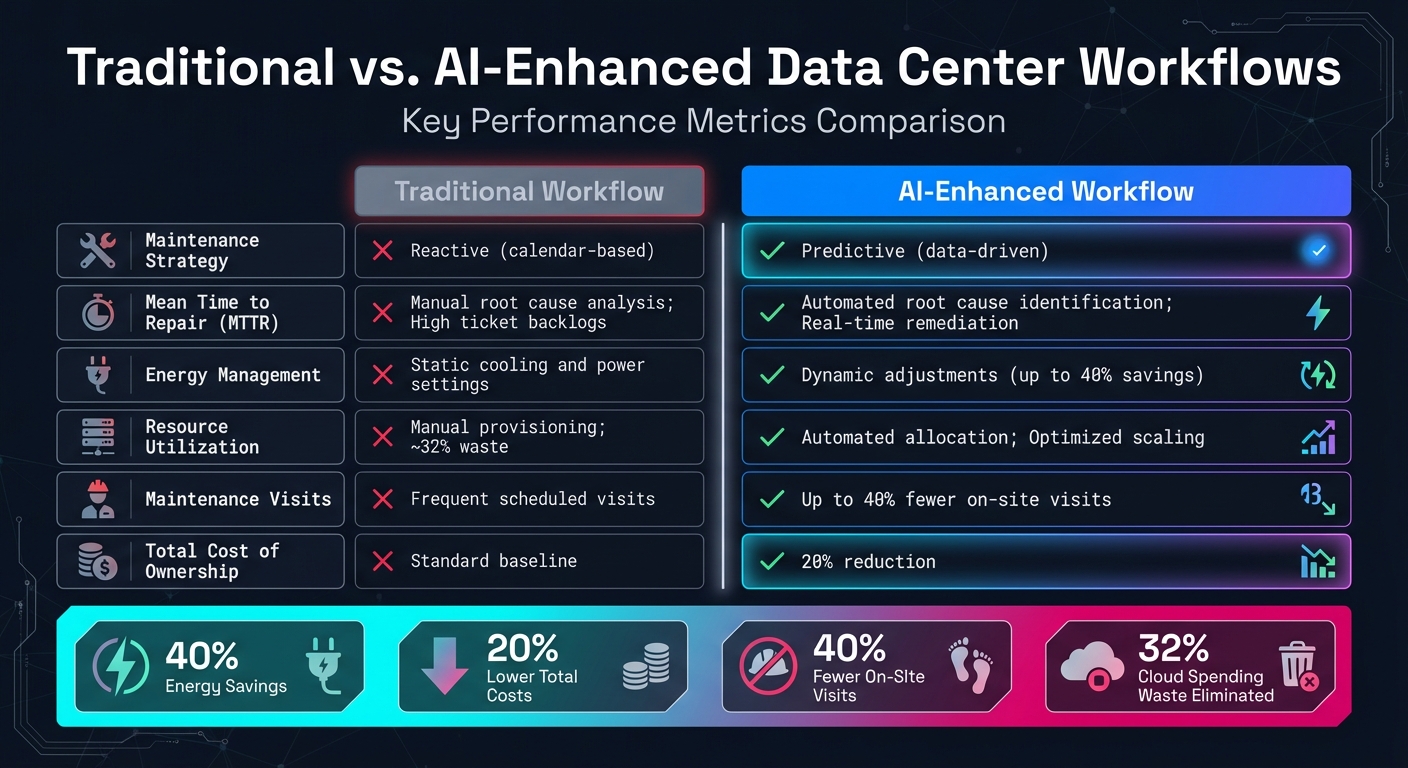

Traditional vs AI-Enhanced Data Center Workflows: Key Performance Metrics

AI is reshaping how data centers operate, turning manual, reactive processes into streamlined, intelligent systems. The results? Tangible improvements in efficiency and cost savings. For example, unplanned downtime, which can cost an average of $5,600 to $9,000 per minute, is now being mitigated by AI's ability to predict and prevent failures. Automated anomaly detection and proactive interventions also reduce the risks tied to human error [19][5].

When it comes to energy management, the impact of AI is even more striking. Systems powered by AI can dynamically adjust cooling and power usage, cutting energy bills by as much as 40% [4]. This is critical as global electricity demand from data centers is expected to more than double by 2027 [1]. AI also addresses inefficiencies in resource provisioning. Currently, 32% of cloud spending is wasted due to manual allocation mistakes - something AI solves through real-time, dynamic resource management [4].

AI is transforming maintenance operations, too. Condition-Based Maintenance (CBM) powered by AI reduces on-site visits by up to 40%, while extending equipment lifespans and optimizing interventions. This leads to a 20% reduction in total cost of ownership (TCO) [5].

"AI-powered intelligent workflows and IT automation tools are helping business leaders find competitive advantages, in terms of performance, that were eluding them before."

- Richard Warrick, Global Research Lead, IBM Institute for Business Value [4]

The difference between traditional and AI-powered workflows is stark when you look at key metrics:

| Metric | Traditional Workflow | AI-Enhanced Workflow |
|---|---|---|
| Maintenance Strategy | Reactive | Predictive [5] |
| Mean Time to Repair (MTTR) | Manual root cause analysis; high ticket backlogs | Automated root cause identification; real-time remediation [19] |
| Energy Management | Static cooling and power settings | Dynamic adjustments (up to 40% savings) [4] |
| Resource Utilization | Manual provisioning; ~32% waste | Automated allocation; optimized scaling [4] |
| Maintenance Visits | Frequent scheduled visits | Up to 40% fewer on-site visits [5] |
| Total Cost of Ownership | Standard baseline | 20% reduction [5] |

Another area where AI shines is data quality management. By identifying and eliminating redundant, obsolete, and trivial (ROT) data - such as information older than seven years or no longer required for compliance - AI reduces storage energy consumption and ensures cleaner datasets for business intelligence [1]. This not only cuts operational costs but also supports environmental goals.

These measurable outcomes highlight why businesses are increasingly embracing AI-driven solutions for data center management. The shift is no longer just a trend; it's becoming a necessity for staying competitive.

AI-powered automation is reshaping modern data centers, offering tangible benefits like 40% energy savings, 20% lower total costs, and 40% fewer on-site visits [4][5]. These improvements directly enhance both operational efficiency and reliability.

To tap into these advantages, it’s smart to start with smaller, targeted AI projects. Focus on areas like predictive maintenance, resource allocation, or energy optimization to demonstrate ROI before scaling up [2][21]. From the outset, prioritize data quality and security - AI thrives on clean, well-structured data [20]. And don’t forget to set clear goals that align with your business priorities, whether that’s minimizing downtime, cutting energy expenses, or optimizing resource use.

SurferCloud’s infrastructure is designed to simplify this process. With 17 global data centers, elastic compute servers, and 24/7 support, it provides the tools you need for seamless AI deployment. Its flexible resource scaling ensures your systems can handle high-demand periods while keeping costs in check during slower times.

With 80% of executives aiming to automate IT networking operations by 2027 [4], the real question is no longer if you should adopt AI-driven automation, but how quickly you can implement it. Early adopters will gain long-term advantages in performance, cost efficiency, and overall competitiveness.

Ready to transform your operations? Check out SurferCloud's solutions to see how AI-driven automation can work for you.

AI plays a key role in preventing equipment failures by examining real-time sensor data alongside historical performance records. With the help of machine learning models, it spots unusual patterns that could signal potential problems and predicts the chances of equipment failures before they happen.

This forward-thinking method allows data center teams to plan maintenance ahead of time, cutting down on unexpected downtime and keeping operations running smoothly. By tapping into AI's capabilities, businesses can boost reliability and help critical equipment last longer.
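A simple baseline for spotting unusual patterns is a rolling z-score: compare each new reading with the mean and standard deviation of the readings just before it. This is a stand-in for the machine learning models described above, not a description of any specific product; the window size, the 3-sigma threshold, and the sample temperatures are assumptions.

```python
from statistics import mean, stdev


def zscore_anomalies(readings, window=12, threshold=3.0):
    """Return indices of readings that sit far outside the trailing window."""
    anomalies = []
    for i in range(window, len(readings)):
        recent = readings[i - window:i]
        mu, sigma = mean(recent), stdev(recent)
        # Skip flat windows (sigma == 0) to avoid dividing by zero.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical exhaust temperatures (degrees C) with one sudden spike.
temps = [40, 41, 40, 42, 41, 40, 41, 42, 40, 41, 40, 41, 55, 41]
print(zscore_anomalies(temps))  # [12]
```

Flagged readings become the early warnings that let teams schedule maintenance before a failure.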

AI is transforming how data centers manage energy, making operations smarter and more efficient. With intelligent monitoring and predictive analytics, AI can fine-tune cooling systems, balance server workloads, and pinpoint energy inefficiencies as they happen. This approach not only cuts down on energy use but also trims operational expenses and reduces carbon emissions.

Although the exact savings depend on how it's implemented, many data centers have seen major boosts in efficiency thanks to AI-powered tools. By automating energy-heavy tasks, AI is paving the way for greener, more sustainable data center operations.

To automate data center workflows with AI, businesses should begin by pinpointing the areas where it can make the biggest difference. Common targets include energy optimization, cooling management, and server provisioning, as these tasks tend to yield the most noticeable efficiency gains with AI. The next step is to gather and centralize key data (power usage, temperature metrics, and network activity) to provide a solid base for building effective AI models.

With the data in place, companies can either deploy ready-made AI tools or create custom models tailored to their specific needs. Hosting these models on secure, scalable platforms like SurferCloud provides the computational power needed to keep things running smoothly. Once the AI systems are integrated with existing workflows, it’s crucial to test them in a controlled environment. Monitoring the results and fine-tuning the automation before rolling it out on a larger scale helps minimize risks.

Finally, keeping AI models up to date with fresh data ensures they stay accurate and responsive to changes in the infrastructure. This ongoing refinement allows businesses to streamline their operations, cut costs, and prepare their data centers for future challenges.
