UHost Architecture Deep-Dive: Why Dedicated v

In the world of cloud computing, there is a massive dif...

High availability (HA) ensures that cloud systems remain functional even during failures. The goal? Minimize downtime and protect critical applications. Here's what you need to know:

Best Practices: Regular testing (e.g., simulating failures), monitoring critical metrics, and optimizing configurations are essential for maintaining uptime. Platforms like SurferCloud provide tools like global load balancing, elastic compute servers, and CDN integration to support HA goals.

Bottom Line: High availability is about ensuring uninterrupted service through redundancy, proactive monitoring, and resilient design.

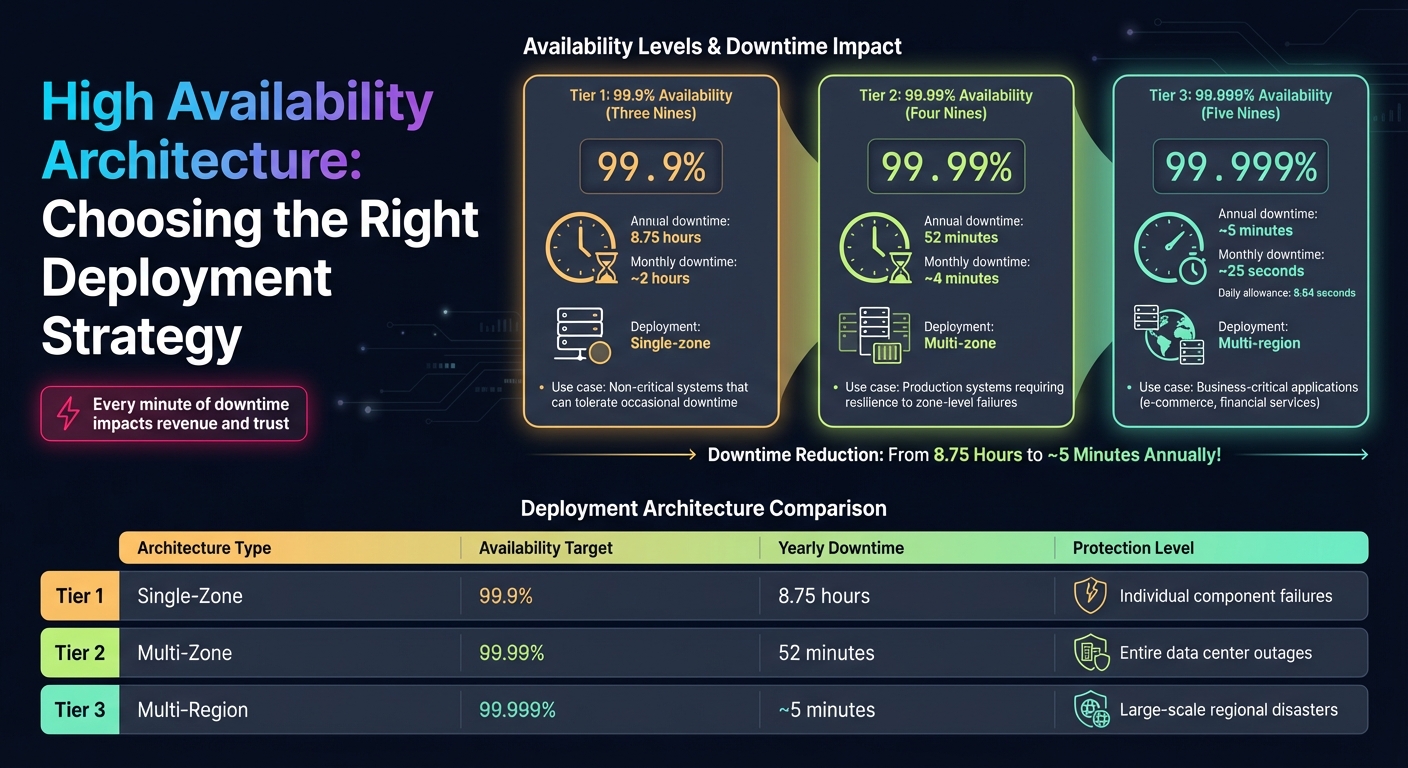

Cloud Availability Tiers: Downtime Comparison and Deployment Architecture

High availability in cloud systems rests on three main pillars: redundancy, monitoring, and automated failover. Together, these elements ensure systems remain operational even in the face of hardware failures, software glitches, or infrastructure disruptions [1].

Google Cloud captures this philosophy well:

"The guidance in this document follows one of Google's key principles for achieving extremely high service availability: plan for failure" [7].

Understanding the distinction between reliability and resilience is crucial here. Reliability refers to a system's ability to consistently perform its intended functions without interruption. On the other hand, resilience is about withstanding disruptions and recovering quickly while maintaining performance [3]. A highly available system must excel in both areas: it should function seamlessly under normal conditions and bounce back efficiently when disruptions occur.

These principles serve as the foundation for the strategies outlined below.

Load balancers play a critical role in preventing individual servers from becoming overwhelmed. They distribute incoming traffic across multiple servers or nodes, ensuring no single resource bears the entire load. Additionally, load balancers continuously monitor the health of backend resources, rerouting traffic to healthy instances whenever necessary [5][6].

This setup eliminates single points of failure and prevents bottlenecks. For example, if a server goes down, the load balancer redirects traffic to functioning servers without interrupting user access. It also simplifies maintenance - individual servers can be updated or repaired while the load balancer directs traffic to active instances.

Different algorithms manage traffic distribution based on workload needs. For instance, round-robin assigns requests sequentially, while least connections directs traffic to the server handling the fewest active sessions. The latter is particularly effective for applications where processing times vary significantly.

Deploying resources across multiple zones (isolated data centers) and regions (geographic areas) safeguards against localized outages caused by natural disasters, power failures, or infrastructure issues [1][7]. A single-zone deployment with redundant tiers typically achieves around 99.73% availability, translating to nearly 2 hours of potential downtime each month. In contrast, multi-region deployments with global load balancing can achieve up to 99.988% availability, reducing maximum monthly downtime to just about 5 minutes [9][10].

| Deployment Type | Target Availability | Yearly Downtime | Ideal Use Case |

|---|---|---|---|

| Single-zone | 99.9% | 8.75 hours | Non-critical systems that can tolerate occasional downtime |

| Multi-zone | 99.99% | 52 minutes | Production systems requiring resilience to zone-level failures |

| Multi-region | 99.999% | ~5 minutes | Business-critical applications like e-commerce or financial services |

Global load balancers further enhance performance by routing users to the nearest region, reducing latency and improving the user experience [6][10].

Redundancy is the cornerstone of high availability. Every critical component - whether it's a database, metadata server, or application tier - should be replicated across independent failure domains [5][2]. A single point of failure (SPOF) arises when one resource has the potential to bring down an entire application tier. The solution? Redundancy.

"Redundancy means that multiple components can perform the same task. The problem of a single point of failure is eliminated because redundant components can take over a task performed by a component that has failed." - Oracle Cloud Infrastructure [1]

Failure domains can range from individual hardware components and virtual machines to entire zones or geographic regions [5]. To ensure continuity, redundancy must be designed across all levels. For example, if a virtual machine, a zone, or even an entire region fails, redundant resources should immediately take over.

Testing these mechanisms is just as important as implementing them. Regular "fire drills" simulate zone or region failures, helping validate that replication and failover systems work as expected [5][2]. Automated recovery processes are also key to meeting low Recovery Time Objectives (RTO), ensuring systems recover quickly without manual intervention [7][8].

To ensure uninterrupted cloud operations, certain key components play a crucial role. These elements work together to address potential failure scenarios, forming the backbone of any strategy aimed at maintaining continuous uptime.

Clustering application servers creates a unified system where multiple servers work together. If one server goes down, others seamlessly take over its workload, avoiding bottlenecks or single points of failure. This setup relies on tools like resource agents, fencing mechanisms, and quorum logic to keep services running smoothly [12].

A crucial fencing tool, STONITH ("Shoot The Other Node In The Head"), ensures failed nodes cannot corrupt shared resources [12]. For better reliability, clusters should have an odd number of nodes - commonly three or five. This configuration ensures quorum can be reached during network splits, with at least (n+1)/2 nodes required to make decisions [13].

Reliable data management is a cornerstone of high availability. Database replication ensures your data exists in multiple locations, with a primary node managing writes and replicas handling reads. If the primary node fails, one of the replicas automatically takes over, often within 10 to 30 seconds in well-tuned systems [11]. This quick failover minimizes recovery time and data loss.

Replication can be configured in different modes:

To avoid "split-brain" scenarios - where multiple nodes mistakenly believe they are the primary - deploy a witness node. This lightweight resource participates in leader elections without storing data. Tools like Orchestrator, Patroni, or pg_auto_failover automate failover processes, while connection proxies like ProxySQL ensure traffic is directed to the correct primary node without requiring application changes [13][14].

Auto-scaling allows your system to adapt to changing demand by adjusting the number of active servers in real time. This ensures responsiveness during traffic spikes while minimizing resource use during quieter periods. Horizontal scaling is particularly effective in maintaining availability during sudden surges [3][6].

Load balancers work hand-in-hand with auto-scaling to manage traffic. If one zone experiences an outage, load balancers redirect traffic to healthy instances elsewhere, ensuring uninterrupted service. Two types of load balancers are commonly used:

These combined strategies strengthen your cloud architecture, keeping it resilient against unexpected failures and traffic fluctuations.

SurferCloud operates a network of over 17 global data centers, allowing for resilient and geographically distributed architectures. To maximize reliability, treat each data center as an independent failure domain, complete with its own infrastructure. This approach minimizes the risk of correlated outages [1][6].

Start by defining your availability goals and acceptable downtime. Deploying across multiple zones within SurferCloud is a proven way to reduce downtime. For systems requiring "five nines" reliability - equating to about 5.26 minutes of downtime annually - multi-region deployments are essential [17].

"Composition is important because without it, your application availability cannot exceed that of the cloud products you use."

– Google Cloud Architecture Center [4]

SurferCloud's global load balancing ensures automatic traffic rerouting in case of an issue. For cross-region failover, set your DNS Time-to-Live (TTL) to 30–60 seconds. This ensures clients can quickly switch to healthy endpoints during outages [15].

Define your Recovery Time Objective (RTO) and Recovery Point Objective (RPO) for each application tier. These metrics will help determine whether synchronous replication (for zero data loss) or asynchronous replication (for better performance over long distances) is more appropriate [4][7][6]. A distributed setup like this takes full advantage of SurferCloud's compute, networking, and storage capabilities.

SurferCloud's elastic compute servers play a key role in eliminating single points of failure. To ensure uninterrupted service, replicate critical components across both network and application layers [16].

Spread compute instances across multiple zones and regions to maintain service during localized outages [2][5]. Within a single availability zone, distribute instances across distinct fault domains - groups of hardware that share infrastructure - to mitigate risks from hardware failures or maintenance activities [1].

Set up load balancer health checks to quickly detect and redirect traffic from failing instances. For example, the total outage duration can be estimated using this formula: DNS TTL + (Health Check Interval × Unhealthy Threshold). Adjust these settings carefully to avoid unnecessary rerouting caused by brief network hiccups [15].

Use instance templates to maintain consistent configurations across zones, ensuring streamlined redundancy [7][15]. Periodically run "fire drills" to simulate zone outages and test your failover processes [2][5]. Additionally, make sure your data plane remains operational during disasters to support critical functions [7].

With compute redundancy in place, integrating networking and CDN services can further improve uptime and performance.

Building a high availability architecture requires seamless integration between SurferCloud's networking, CDN, and database tools. Start by configuring geolocation DNS routing to direct traffic to the nearest healthy regional load balancer based on the user's location [15].

For databases, choose a replication strategy that aligns with your RPO. Synchronous replication across zones ensures zero data loss but may impact performance. Asynchronous replication, on the other hand, enhances throughput in multi-region setups, tolerating minimal data loss [4][7]. When designed correctly, multi-region database setups can achieve 99.999% availability [7].

SurferCloud's CDN services reduce the load on origin servers by caching content at edge locations closer to users. This not only improves performance but also provides an additional layer of redundancy since cached content remains accessible even if origin servers go offline [18].

Health check probes should originate from at least three different regions to reliably detect outages and avoid false alarms [15]. Use Infrastructure as Code tools to maintain consistent networking configurations, SSL certificates, and database settings across data centers. This prevents configuration drift during failover scenarios [15][18].

| Deployment Architecture | Availability Target | Annual Downtime | Protection Level |

|---|---|---|---|

| Single-Zone | 99.9% | 8.75 hours | Individual component failures [4][10] |

| Multi-Zone | 99.99% | 52 minutes | Entire data center outages [4][10] |

| Multi-Region | 99.999% | ~5 minutes | Large-scale regional disasters [10] |

Ensuring high availability doesn’t stop at deployment - it’s an ongoing process that relies on consistent monitoring, thorough testing, and continuous fine-tuning. This proactive approach helps track crucial metrics and resolve issues quickly, minimizing the risk of unexpected failures.

Pay close attention to metrics like request latency, throughput, error rates, CPU usage, memory consumption, network latency, packet loss, and disk IOPS to identify and address potential problems early [19][20]. For databases, keep an eye on query duration, timeouts, and storage capacity [19][20]. If you’re aiming for 99.99% availability, keep in mind that this allows for just 8.64 seconds of downtime per 24-hour period [20].

"The only error recovery that works is the path you test frequently."

– AWS Documentation [23]

Simulating failures is another essential part of preparation. Use chaos engineering tools to mimic zone outages, database failures, and network disruptions. These exercises not only validate automated failover mechanisms but also help uncover blind spots in your monitoring systems [22][21]. Regular simulations like these equip your team to handle real-world incidents more effectively, reducing the chances of human error during critical moments [22]. When combined with a strong architectural foundation, these practices ensure every system component delivers reliable performance.

Cost and performance optimization are equally important. Implement auto-scaling to adjust resources dynamically, which can cut costs by up to 30% during off-peak times. Right-sizing compute instances to maintain about 70% utilization further enhances efficiency [24]. Companies that perform regular architectural reviews often experience a 35% boost in operational reliability, while real-time monitoring and alerting can reduce unplanned outages by 40% [24].

To strengthen your monitoring practices, use correlation IDs to trace requests across services and deploy health probes from multiple regions to catch external issues quickly [19]. Employ asynchronous logging to avoid request bottlenecks, and separate audit logs from diagnostic logs to prevent dropped transactions during periods of high traffic [19]. These continuous efforts in monitoring, testing, and optimization form the backbone of a resilient high availability system.

Ensuring high availability goes beyond just deploying redundant servers; it requires addressing potential failure points at every level of your infrastructure. As Cloud Architect Riad Mesharafa explains:

"High availability implies there is no single point of failure. Everything from load balancer, firewall and router, to reverse proxy and monitoring systems, is completely redundant" [16].

This involves meticulously coordinating components like load balancers, application servers, databases, and network systems across multiple failure domains to minimize risks.

SurferCloud exemplifies these principles with its robust global network. The platform's commitment to 99.95% service availability and 99.9999% data reliability ensures that your critical applications remain operational [26][27]. Features such as UVPC cross-availability zone subnets provide seamless disaster recovery within a single region, while kernel hot patch technology allows for security updates without interrupting server operations - even during maintenance windows [25][26][27]. Additionally, SurferCloud's elastic compute servers, built-in load balancing, and automated scaling simplify achieving high availability without adding unnecessary complexity or expense [27].

Reliability refers to a system's capability to consistently deliver expected performance under normal conditions. This includes meeting critical service-level goals such as uptime, response times, and maintaining low error rates. Achieving reliability often involves strategies like building in redundancy, designing fault-tolerant systems, and implementing automated monitoring and recovery tools.

Resilience, meanwhile, focuses on a system's ability to bounce back quickly from disruptions like hardware malfunctions, network failures, or configuration errors. It emphasizes dynamic recovery techniques, such as rerouting traffic or scaling resources, to keep service interruptions to a minimum.

Platforms like SurferCloud combine reliability and resilience within their high availability architecture, ensuring systems not only run smoothly during everyday operations but also recover efficiently when unexpected challenges occur.

Distributing workloads across various geographic regions or availability zones strengthens system reliability by confining issues such as outages or natural disasters to a single location. If one region encounters a failure, traffic is seamlessly redirected to other functioning regions, ensuring reduced downtime and consistent availability.

This strategy also builds fault tolerance and supports maintaining high uptime, serving as a key element in designing a strong cloud infrastructure.

Load balancing is a key strategy for keeping systems dependable. It works by spreading incoming traffic across several servers, zones, or regions. This approach prevents any one resource from being overloaded and ensures that traffic is redirected if a server or region goes offline.

By sharing the workload and rerouting traffic during outages, load balancing helps maintain high availability and reduces the risk of service interruptions. It's a must-have for creating resilient cloud systems.

In the world of cloud computing, there is a massive dif...

Website loading speed is more than just a technical con...

In recent months, a noticeable shift has emerged in the...