Multi-Domain Reverse Proxy & Load Distrib

? Pain Point Developers running multiple small sites...

Serverless AI training eliminates the need to manage infrastructure, focusing instead on model development. Efficient data storage is key to keeping GPUs and TPUs fully utilized, minimizing idle time, and controlling costs. Here's what you need to know:

Optimizing storage selection, data layout, and access patterns ensures smooth training workflows and cost efficiency. SurferCloud offers scalable solutions to meet these demands with high performance and cost-effective storage options.

Serverless AI training involves managing a variety of data types, each with its own storage demands. Raw training datasets often include unstructured data - like images, videos, audio files, and text documents - that can quickly grow to petabyte-scale sizes. These are commonly stored in object storage to ensure accessibility, even when they’re not in active use. Meanwhile, feature stores house preprocessed data that’s been cleaned and transformed, ready for immediate use in machine learning workflows. Model checkpoints capture the model’s state at specific points during training, acting as recovery markers in case of interruptions. On top of that, logs and metrics monitor training progress, resource usage, and performance indicators, while model artifacts - the final trained weights and binaries - require secure, version-controlled storage for deployment and future reference. Each data type adds unique demands on the storage system, setting the stage for the performance requirements discussed next.

Meeting the demands of serverless AI workloads requires a storage system that excels in performance. First, high throughput is essential to keep GPUs running at full capacity [6][7]. Managed parallel file systems can deliver throughput rates as high as 1 TB/s with sub-millisecond latency, while object storage can also achieve high throughput but typically relies on hundreds or thousands of parallel threads [1][2]. Low latency is equally critical, especially when thousands of GPUs might be accessing model weights simultaneously [4][7].

Additionally, storage systems must scale elastically to accommodate the unpredictable nature of AI workloads. These workloads often involve sudden bursts of intense processing followed by quieter periods [8]. Local storage can help reduce network bottlenecks and avoid costly data transfer delays [6][7].

In addition to performance, durability and cost efficiency are vital considerations. As David Johnson, Director of Product Marketing at Backblaze, explains:

"Training AI models is a delicate, resource-intensive process. Hardware failures, software bugs, and even power outages can derail week-long training runs, wasting precious time and compute resources" [6].

Frequent checkpointing is a practical solution, allowing training to resume from the latest saved state. Geographic redundancy further protects against regional outages, while object locking ensures data isn’t accidentally deleted.

Cost optimization in serverless AI training goes beyond the price of storage itself. A significant expense can stem from GPU idle time caused by storage systems that fail to deliver data quickly enough [1][9]. While high-performance storage might have a higher cost per gigabyte, it can reduce overall training costs by maximizing GPU utilization. Tiered storage strategies offer a way to balance costs - using faster, more expensive storage for active training data while shifting completed checkpoints and archived models to more economical object storage [1][9]. Additionally, egress fees for moving data between providers can become a hidden expense that adds up quickly [6].

When selecting a storage solution, it’s crucial to weigh throughput, latency, scalability, durability, and the total cost - including both storage and compute efficiency. The best choice will depend on your specific workload patterns, data volumes, and budget.

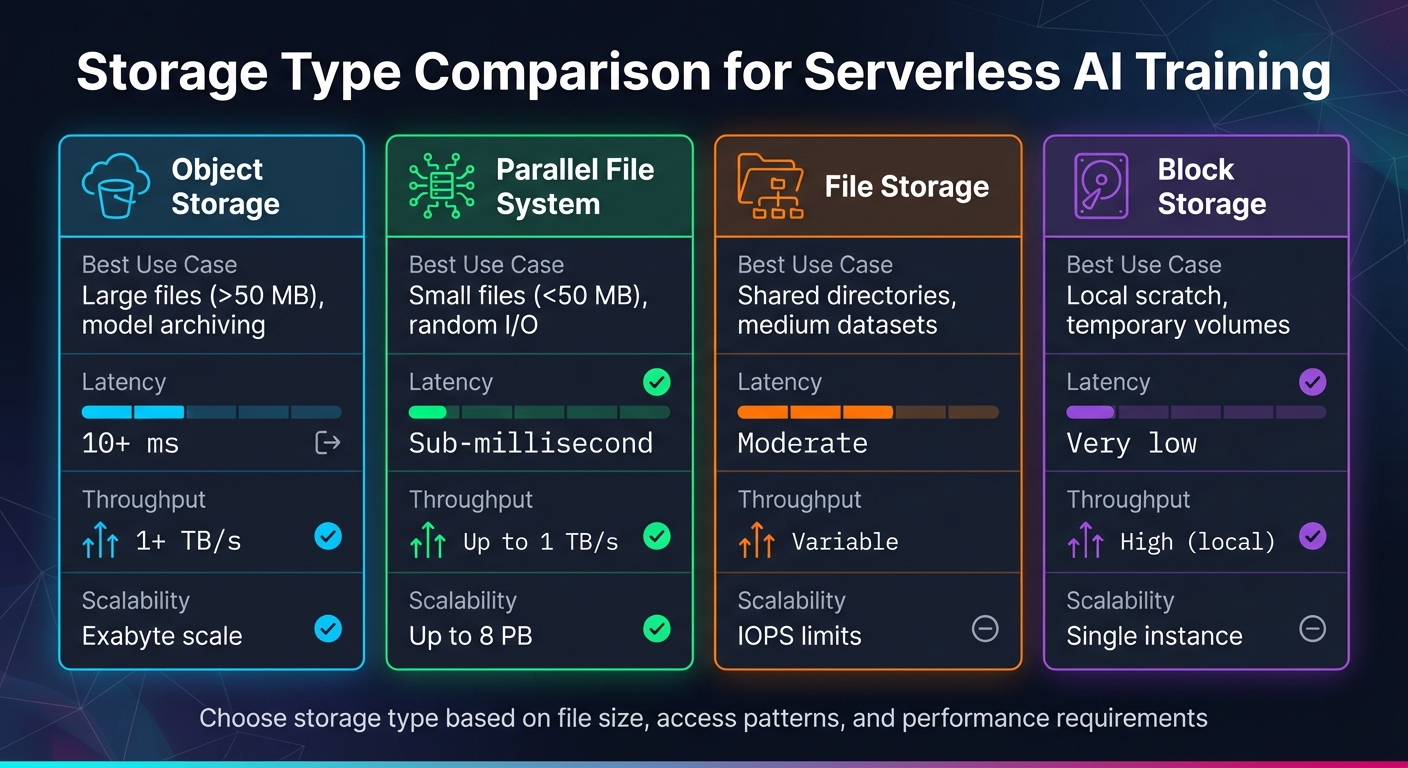

Serverless AI Storage Types Comparison: Performance and Use Cases

Choosing the right storage for your serverless AI workloads depends on factors like file size, access patterns, and performance needs. For large files (typically over 50 MB) and sequential access patterns, object storage is a great option. It offers massive capacity - up to exabyte scale - and throughput as high as 1.25 TB/s, with latencies in the tens of milliseconds. On the other hand, parallel file systems like Lustre shine when working with smaller files (under 50 MB) and random I/O operations. They deliver sub-millisecond latency and throughput up to 1 TB/s, making them ideal for training scenarios that involve numerous small files.

File storage provides a standard POSIX interface for shared directories, but scaling can hit IOPS limits. Meanwhile, block storage is perfect for high-performance local scratch space, though it’s limited to single-instance use.

| Storage Type | Best Use Case | Latency | Throughput | Scalability |

|---|---|---|---|---|

| Object Storage | Large files (>50 MB), model archiving | 10+ ms | 1+ TB/s | Exabyte scale |

| Parallel File System | Small files (<50 MB), random I/O | Sub-millisecond | Up to 1 TB/s | Up to 8 PB |

| File Storage | Shared directories, medium datasets | Moderate | Variable | IOPS limits |

| Block Storage | Local scratch, temporary volumes | Very low | High (local) | Single instance |

Google Cloud’s AI Hypercomputer documentation highlights this:

"Cloud Storage with Cloud Storage FUSE is the recommended storage solution for most AI and ML use cases because it lets you scale your data storage with more cost efficiency than file system services."

For workloads demanding high performance, the Google Cloud Architecture Center emphasizes:

"Managed Lustre is ideal for AI and ML workloads that need to provide low-latency access of less than one millisecond with high throughput and high input/output operations per second (IOPS)."

The key is to tailor your data layout to fully utilize the strengths of your chosen storage type.

Efficient data organization and formatting are critical for speeding up serverless AI training while keeping storage costs manageable. Using columnar formats like Parquet can shrink storage needs and accelerate targeted queries. Small files should be combined into containers around 150 MB to reduce metadata overhead and improve read speeds. Formats like TFRecord (for TensorFlow) or WebDataset (for PyTorch) are particularly effective for bundling thousands of small files into manageable shards.

Techniques like Z-ordering can cluster related data, enabling training pipelines to skip unnecessary data by leveraging statistics on value ranges. Avoid over-partitioning datasets under 1 TB - each partition should hold at least 1 GB. For datasets smaller than 50–100 GB, standard file download methods work fine, but larger datasets benefit from streaming or parallel file systems to minimize delays.

After selecting storage and organizing your data, optimizing access patterns and placement can significantly enhance efficiency. For example, streaming data with Cloud Storage FUSE allows training to begin immediately, cutting down on GPU idle time - a major cost factor in serverless AI training. Using multiple threads for parallel data loading ensures bandwidth is fully utilized, enabling Cloud Storage to deliver high throughput when properly configured.

Placing storage and compute resources in the same zone minimizes latency and avoids cross-region data transfer fees, which typically range from $0.08 to $0.12 per GB. Performance-sensitive workloads can benefit from SSD-backed zonal caches, which offer throughput up to 2.5 TB/s and more consistent latencies. For temporary data like checkpoints, using local scratch space (e.g., instance NVMe SSDs) reduces I/O bottlenecks, keeping GPUs running efficiently.

Additionally, enabling hierarchical namespaces in object storage supports fast directory renames, enabling asynchronous checkpointing without disrupting training. While sequential read patterns work well with object storage, workloads requiring random access should leverage parallel file systems for their lower latencies and higher performance.

Efficient serverless AI training isn't just about performance - it also hinges on secure and cost-effective data management.

Protecting training data requires a multi-layered approach. Using Customer-Managed Encryption Keys (CMEK), you can maintain full control over data at rest across object storage and AI training platforms [12]. Role-Based Access Control (RBAC) is another key strategy: assign the minimum permissions necessary, such as objectViewer for read-only access or objectAdmin for managing checkpoint buckets, and use dedicated service accounts for added security [12][10].

To prevent unauthorized data access, leverage VPC Service Controls to safeguard AI resources against data exfiltration [12]. But security isn't just about keeping data safe - it's about ensuring its integrity. Regularly validate data formats, ranges, and distributions to guard against data poisoning [12]. Keep detailed logs of all API calls and track dataset lineage to meet compliance requirements [12]. As highlighted by CISA:

"Data security plays a critical role in ensuring the accuracy, integrity, and trustworthiness of AI outcomes" [11].

Organizing data with automated policies is another way to enhance security while also keeping costs under control.

Cut storage costs by aligning data storage with access patterns. Begin by categorizing datasets based on their retention and access needs [14]. Use automated lifecycle policies to transition data to lower-cost storage tiers or delete it when it’s no longer needed [14]. For workloads with unpredictable access patterns, intelligent tiering features can dynamically move data between high-usage and infrequent-access tiers in real time [13][14].

For active training checkpoints, store data on high-performance systems like Managed Lustre to ensure quick recovery. Once the training is complete, export these checkpoints to cost-efficient object storage for long-term retention [1]. Set clear retention periods to delete outdated data, avoiding unnecessary expenses [14][15]. Before archiving data in cold storage, compress and aggregate files to maximize storage efficiency [14].

These strategies naturally tie into the cost-saving features offered by SurferCloud.

SurferCloud's storage solutions are designed to balance performance and cost. For active training data, the Standard storage class delivers high-speed performance. Meanwhile, Nearline, Coldline, and Archive classes offer progressively lower-cost options for less frequently accessed data [3]. Keep in mind that retrieval fees apply to these lower-cost tiers, making them better suited for archiving models rather than active training [3][15].

With Autoclass features, SurferCloud can automatically assign storage classes based on actual access patterns. This eliminates the need for manual tracking and ensures you’re not overspending on rarely accessed data [3][15]. Use cost-allocation tags across all resources - like storage buckets, compute instances, and endpoints - to monitor expenses for individual AI projects [15]. Avoid redundant storage operations that could rack up extra fees [10]. SurferCloud also offers 24/7 expert support and scalable resources, helping you fine-tune storage configurations without sacrificing the performance your serverless AI workloads require.

Successful serverless AI training relies on aligning storage architecture with the specific demands of each training phase. For instance, parallel file systems like Managed Lustre provide lightning-fast sub-millisecond latency for handling small files, while object storage offers the scalability needed for massive datasets, delivering up to 1 TB/s throughput to ensure GPUs and TPUs remain fully utilized[1][2].

Fine-tuning data formats and access patterns can significantly enhance training efficiency. Techniques like bundling small files and leveraging SSD-backed caching have been shown to increase training speed by up to 2.2× and throughput by as much as 2.9×[5]. These optimizations not only accelerate workflows but also help cut compute costs by reducing idle time for accelerators. Such improvements pave the way for streamlined and cost-conscious data management.

Equally important are robust security measures and cost-control strategies. Employing strong identity and access controls, encryption protocols, and secure network isolation ensures your training data stays protected. Meanwhile, automated lifecycle management and storage tiering can lower expenses by shifting less frequently accessed data to more affordable storage options.

Bringing these strategies together, SurferCloud provides an infrastructure tailored for efficient serverless AI training. Its high-performance storage solutions, automated Autoclass tiering, and scalable operations across 17+ global data centers deliver the throughput needed for active training while keeping long-term storage costs in check. On top of that, SurferCloud offers 24/7 expert support to fine-tune configurations, ensuring optimal performance, security, and cost-efficiency.

When assessing serverless AI data storage, it's crucial to keep an eye on a few key performance metrics. These include storage capacity, latency, throughput (measured in bandwidth or IOPS), durability (such as 99.999999999% data durability), and availability. Monitoring these ensures your data stays accessible, secure, and runs smoothly - especially during AI model training.

Paying attention to these factors allows you to fine-tune your storage setup to meet the demands of serverless AI workloads while ensuring both reliability and scalability.

To make serverless AI model training more efficient, organizing and storing your data properly is key. Start by opting for a scalable object storage system, such as SurferCloud, to centralize your training files. Object storage systems are great for handling data streams because they provide high throughput and low latency.

To enhance read efficiency and reduce overhead, combine smaller files into larger, sequential formats like TFRecord or Parquet. It’s also a good idea to structure your storage using clear folder hierarchies. For instance, separate folders for training, validation, and checkpoints can help keep things organized. Compressing your data where possible is another smart move - it cuts storage costs while still ensuring quick access during training.

These strategies not only help you scale efficiently but also cut costs and make the entire training process smoother for serverless AI models.

Keeping storage costs in check during serverless AI training can be challenging, but a few smart strategies can make a big difference:

These simple yet effective steps can help you manage storage efficiently, keeping costs under control while ensuring your data remains accessible and ready for use.

? Pain Point Developers running multiple small sites...

Reserved Instances (RIs) are a cost-saving option for c...

Yes, it's entirely feasible to run a Linux operating sy...